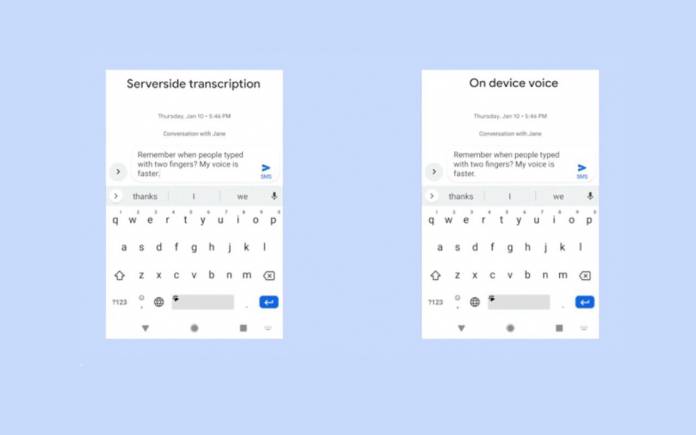

On-device AI has been around for several years, but only now are OEMs getting serious about implementing the technology. Google has recently announced an all-neural, on-device speech recognizer that won’t depend much on a network connection. End-to-end speech recognition happens on the device itself, made possible by RNN transducer (RNN-T) technology. The speech recognizer resides on the device and is powerful enough to handle speech input in Gboard. It is described as compact enough to be stored on a phone.

The idea is that the speech recognizer is available all the time, whether you are offline or online. Words are recognized character by character as you speak, so the text appears in real time.

It works like a keyboard dictation system, except that it listens to your voice. Google says problems caused by network latency or spotty connections are reduced.

The new system uses a Recurrent Neural Network Transducer (RNN-T). It is described as a sequence-to-sequence model that doesn’t employ attention mechanisms. It processes input samples continuously and streams output symbols as it goes, which suits speech dictation.
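To give a feel for what "streams output symbols" means, here is a minimal Python sketch of a greedy, frame-by-frame transducer decoding loop. It is a toy and not Google's implementation: `greedy_rnnt_stream`, `toy_joint`, the `<blank>` symbol name and the string-based "audio frames" are all hypothetical stand-ins for trained neural networks and real audio features. The one thing it tries to show is the control flow: for each incoming frame, the model either emits a character right away or emits a blank and moves on to the next frame.

```python
from itertools import accumulate
from typing import Callable, Dict, Iterable, Iterator, List

BLANK = "<blank>"  # hypothetical name for the "emit nothing, go to next frame" symbol


def greedy_rnnt_stream(
    encoder_outputs: Iterable[str],
    joint: Callable[[str, List[str]], Dict[str, float]],
    max_symbols_per_frame: int = 8,
) -> Iterator[str]:
    """Yield symbols as soon as they are decided, one audio frame at a time."""
    history: List[str] = []  # non-blank symbols emitted so far
    for enc in encoder_outputs:  # one step per incoming frame
        for _ in range(max_symbols_per_frame):
            scores = joint(enc, history)
            best = max(scores, key=scores.get)  # greedy: take the top-scoring symbol
            if best == BLANK:
                break  # nothing more to say for this frame
            history.append(best)
            yield best  # streamed out immediately, no waiting for the utterance to end


def toy_joint(enc: str, history: List[str]) -> Dict[str, float]:
    """Toy stand-in for a trained joint network (assumption, not a real model).

    It prefers the next not-yet-emitted character the encoder has "heard",
    and prefers blank once the output has caught up with the input.
    """
    if len(history) < len(enc):
        return {enc[len(history)]: 1.0, BLANK: 0.0}
    return {BLANK: 1.0}


# Toy "audio": each frame holds the characters spoken during it (possibly none).
frames = ["h", "", "el", "l", "", "o"]
# Toy recurrent encoder: its state accumulates everything heard so far.
encoder_outputs = accumulate(frames)

text = "".join(greedy_rnnt_stream(encoder_outputs, toy_joint))
print(text)  # hello
```

Because the loop yields each character as soon as it is chosen, a caller could update the text field after every frame instead of waiting for the speaker to finish, which is the behavior described above.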

RNN-T allows offline recognition because the models are hosted directly on the device. Search happens in a single neural network that has been trained with RNN-T and is very light at 80MB.

This all-neural, on-device Gboard speech recognizer will be available on all Pixel models. It will support American English only for now, but expect other languages to become available in the near future.

SOURCE: Google AI Blog