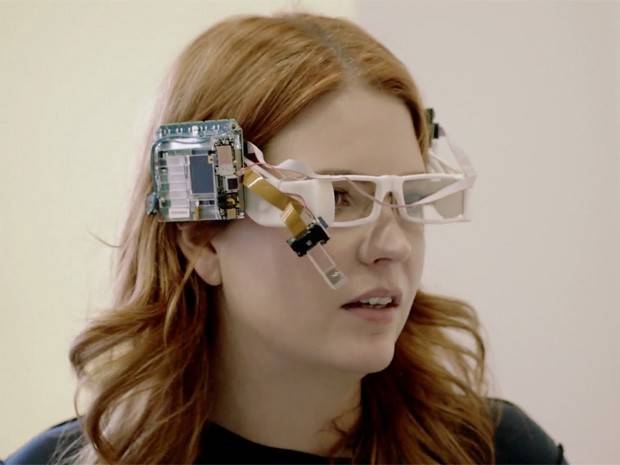

It started in 2011, when the camera mounted on Google Glass presented the project's engineers with some tough challenges. The Gcam project was set up specifically to solve Google Glass's imaging issues, but it soon became apparent that it was meant for bigger and better things.

Google Glass had a problem: the camera on the wearable was small, because it was eyewear and a bigger lens was not an option. It also had very little computing power, and it used a mobile camera sensor. All of this added up to poor, high-contrast images, especially in low-light situations. Enter Marc Levoy, who led his team to approach the problem from the software side of things. The rest is history.


The solution was to take a rapid sequence of shots and then fuse them to create a single, higher-quality image. Today we know this kind of technique as HDR, but it was not readily available on mobile devices in 2011, and certainly not on Google Glass. The technique allowed Glass users to take brighter, sharper pictures with greater detail and clarity.
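To see why fusing a burst helps, here is a toy Python sketch. It is not Gcam's actual pipeline, which is not described here. It assumes the frames are already perfectly aligned, that sensor noise is random and Gaussian, and that a plain per-pixel average is a reasonable stand-in for "fusing". The function names (`simulate_burst`, `merge_burst`, `rms_error`) are made up for illustration.

```python
import random


def simulate_burst(scene, num_frames, noise_sigma, rng):
    """Return num_frames noisy copies of a scene (a flat list of pixel values)."""
    return [
        [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]
        for _ in range(num_frames)
    ]


def merge_burst(frames):
    """Fuse frames with a per-pixel mean. Assumes the frames are already aligned."""
    return [sum(pixels) / len(frames) for pixels in zip(*frames)]


def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(image, reference))
    return (squared / len(reference)) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
scene = [rng.uniform(0.0, 255.0) for _ in range(2000)]  # hypothetical "true" image
burst = simulate_burst(scene, num_frames=8, noise_sigma=20.0, rng=rng)
merged = merge_burst(burst)

single_error = rms_error(burst[0], scene)
merged_error = rms_error(merged, scene)
print(f"single frame RMS error: {single_error:.2f}")
print(f"merged burst RMS error: {merged_error:.2f}")
```

Under these assumptions, averaging N frames cuts random noise by roughly the square root of N, so the merged image lands noticeably closer to the true scene than any single shot. A real camera also has to cope with hand shake and moving subjects, which this sketch ignores.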

The technology was such a big hit that it debuted as HDR+ in the Nexus 5 and the Nexus 6 in 2014. More recently, Gcam's HDR+ technology was used in Google's revolutionary new smartphone, the Pixel. The results speak for themselves: in 2016, DxOMark rated the Google Pixel as having "the best smartphone camera ever made". It still sits on top of the rankings as of today.

SOURCE: X Blog