We’ve been seeing previews and leaks of this new feature for Pixel devices for a while, and now we have official confirmation: Google Lens is finally rolling out to Made by Google devices, and it works together with the Google Assistant. With this new tool, you’ll be able to find out more about the things around you without having to type a word. The visual search tool is initially available only on Pixel devices and only in English, but hopefully it will expand to other Android smartphones and add support for other languages as well.

If you don’t yet know how Google Lens works, it basically turns your camera into a visual search tool. Pair it with the machine learning behind Google Assistant and you get a feature that should make it easier to learn about the things you see around you, whether it’s a photo or an actual object. Together, Lens and Assistant can save information from business cards without any typing or copying, follow URLs, call phone numbers, and open addresses in Maps, all just by pointing Lens at whatever shows that information.

You can also use Google Lens to learn more about a city or place you’re visiting for the first time. It can pull up pop culture information too, like movie reviews before you decide to buy a ticket at the theater, or book reviews when you’re choosing what to buy at the bookstore. Lens can scan barcodes and QR codes as well, and your Assistant will do the rest.

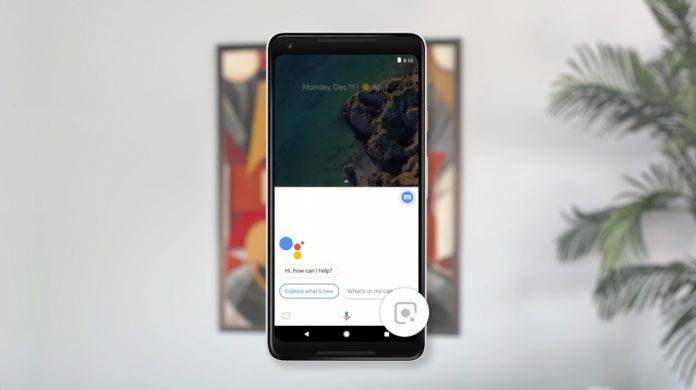

Google Lens in the Assistant is already rolling out to Pixel devices in the US, UK, Australia, Canada, India, and Singapore, but it currently supports only English. The Google Lens icon is located at the bottom right corner of your Google Assistant interface. Hopefully, it will eventually be available on other devices as well.

SOURCE: Google