At the recently concluded I/O developers’ conference, Google spent a bit of time on Google Lens, its AI-powered image recognition feature. Not only did it get new and improved features, but more devices also finally gained Lens support in their native camera apps instead of only through Google Photos. Google has now released an updated version of the feature, and along with it comes a cleaner, whiter user interface that looks even more like a Google product. The real-time detection feature is also (slowly) becoming available for smartphones that already have Google Lens.

The camera feature is actually a pretty useful tool for when you want to run a visual search on the things around you but don’t have the words for a proper search. The problem was that not a lot of devices had it, aside from the Google Pixel and some high-end Samsung devices. And while last year’s integration with Google Photos was pretty helpful, you would still want to access it directly from your smartphone’s camera. Well, the good news for those who have newer devices from OEMs like LG, Motorola, Xiaomi, Sony Mobile, HMD/Nokia, Transsion, TCL, OnePlus, BQ, and Asus is that you can now just open your camera and Google Lens should work just fine.

As for the new features that were announced and are now rolling out, the most interesting is real-time detection. Previously, you had to stop and tap on the screen before Lens could recognize an object and give you information about it. Now when you pan around a room, it will show a colored dot on things it recognizes, and if you want to know more about one, just tap on it and the information will pop up from below. You also have smart text selection, which should show you relevant information and additional photos for things like recipes and restaurant menus. There’s also style match, which is great for clothes and accessories, as it will show you similar items that you may be interested in.

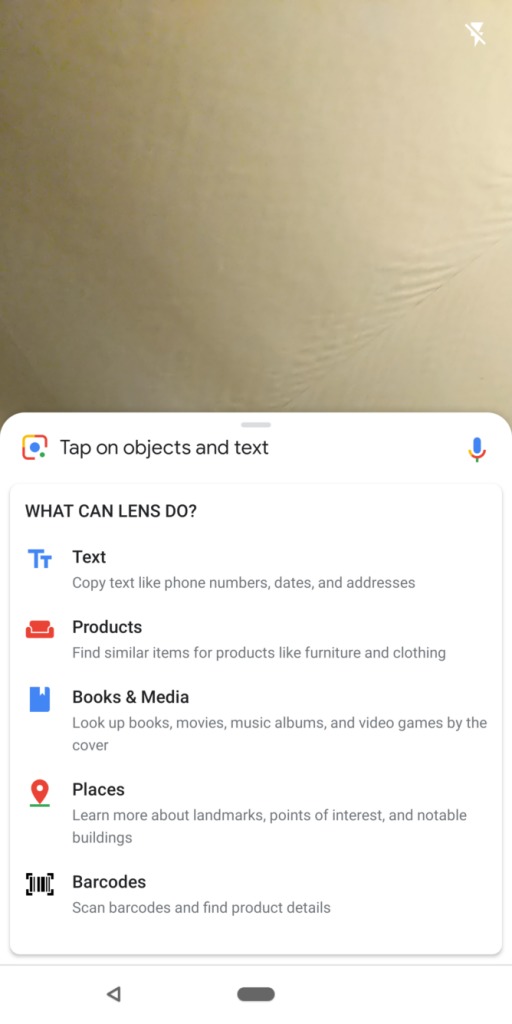

For those who already had Google Lens on their smartphone, what you’ll notice is a new rounded card at the bottom of the screen. Previously, you just had a gradient-to-black overlay and a big bubble button for actions. You’ll also see the white UI when you pull up the screen, which gives you examples and instructions on what Lens can do for you. The examples indicated are:

Text (copy phone numbers, dates, addresses)

Products (find items similar to clothing and furniture, with hopefully more categories in the future)

Books & Media (identify a title from its cover or image and get links to where you can buy it)

Places (learn more about landmarks, buildings, scenery, etc.)

Barcodes (scan them to get more details)

As with a lot of things, there’s still room for improvement in Google Lens, especially now that more Android users will be able to access it from their device’s default camera. Google removed the “Remember this” and “Import to Keep” shortcuts, so hopefully the next update will replace them with something better. The update is server-side, so you’ll just have to wait for it to roll out to you.

VIA: Android Police