You would think that technology is the one thing you cannot accuse of being racially biased. But according to research by Joy Buolamwini of the MIT Media Lab, facial recognition technology is most accurate at identifying white men. This is not because computers are racist, but because of the data sets the algorithms are trained on and the conditions under which they are created. That’s why she’s now pushing for “algorithmic accountability” so developers and software engineers can make automated decisions more “transparent, explainable, and fair”.

Earlier studies have already shown how technology can be racially biased. Facial recognition software is right 99% of the time when the photo is of a white man, but errors rise to as much as 35% the darker the skin of the subject in the photo. There seems to be no intentional bias, but there needs to be more intentionality about the data we use to train artificial intelligence software. Since the training data contains more white men than anyone else, it follows that the software will be less accurate at identifying women and people of color.

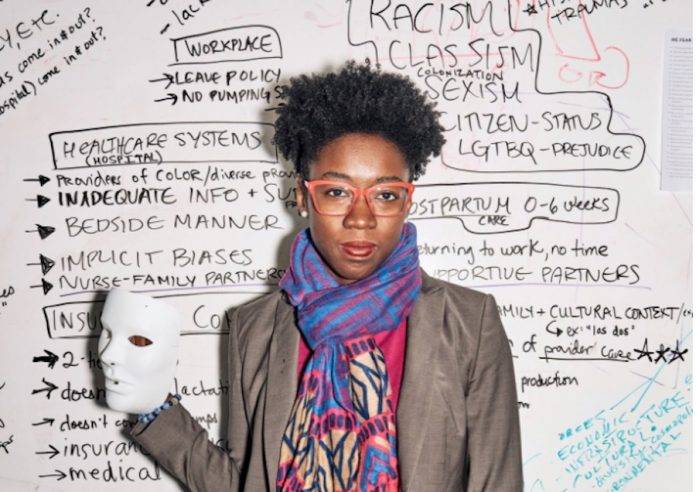

Buolamwini experienced this firsthand as an undergraduate, when facial recognition programs would work well on her white friends but not on her. She went on to found the Algorithmic Justice League, a project that raises awareness of the need for algorithmic accountability among companies and developers working on AI. Her study focused on three companies (Microsoft, IBM, and China's Megvii) because they offer gender classification features and their code is available to the public for testing.

After she shared her findings with all three companies, IBM said it is improving its facial analysis software and will roll out an improved service this month. Microsoft said it is investing in research so that it can recognize, understand, and eventually remove bias in facial recognition. Megvii has yet to comment. To read her entire paper, click here.

SOURCE: New York Times