Weeks after a hate attack in New Zealand left 50 people dead, Facebook is finally putting its foot down and will start labeling white nationalist and separatist content as hate speech. The platform has drawn widespread criticism for its lax moderation of this kind of content from its users, and the attack in Christchurch was even live-streamed on Facebook as it happened. Now the social media giant is revising its earlier policy on such content across both Facebook and Instagram.

In a blog post, Facebook explained that while it has long had a policy against white supremacist groups and content, it initially treated white nationalism and separatism as expressions of broader concepts of nationalism and separatism, “which are an important part of people’s identity”. After further review, and through conversations with members of civil society and academics, it has “confirmed” that these ideologies are intrinsically linked to white supremacy and organized hate groups.

People will still be allowed to “demonstrate pride” in their ethnic heritage, but praise and support for white nationalism and separatism will no longer be allowed, and users can report such content as hate speech on both Facebook and Instagram. Facebook has also started applying the same machine learning and artificial intelligence tools it used to weed out terrorist content to detect and ban hate groups on its platforms globally.

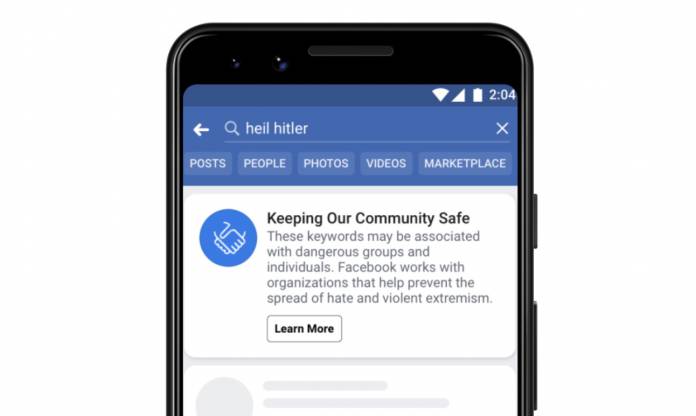

Users who search for terms related to white supremacy will also be redirected to resources like Life After Hate, an organization founded by former violent extremists. It provides crisis intervention, education, support groups, and outreach for people dealing with issues of race and supremacism.

Whether Facebook's moderation of white nationalist and separatist content will prove effective is still a question the company cannot yet answer. It admits that some users will still try to “game” its systems, but says it will keep improving its policies and the technologies it uses to monitor this kind of content.

SOURCE: Facebook