As we’re all aware by now, people can use Facebook to deal with their depression, either by expressing what they’re feeling or by having someone to talk to if they can’t be with other people in person. But there have also been instances where people have expressed suicidal thoughts and, in some extreme cases, actually used Facebook to write their suicide notes or even used Facebook Live to harm themselves. The social media giant has been taking steps to detect people who need help, and now it’s adding AI into the mix.

Facebook has actually been using artificial intelligence in the US to detect posts that may hint at suicide, and now it’s expanding the tool to the rest of the world, except for the European Union (because of its privacy laws). The system proactively detects posts that may be about self-harm or suicide by using pattern recognition technology. It looks at the text of the post itself as well as comments on it, such as “Are you ok?” or “Can I help?”. Previously, someone had to report a post or account that seemed suicidal before Facebook could act on it.

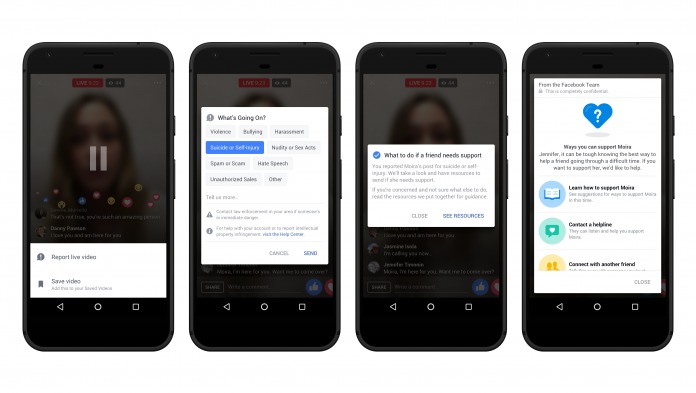

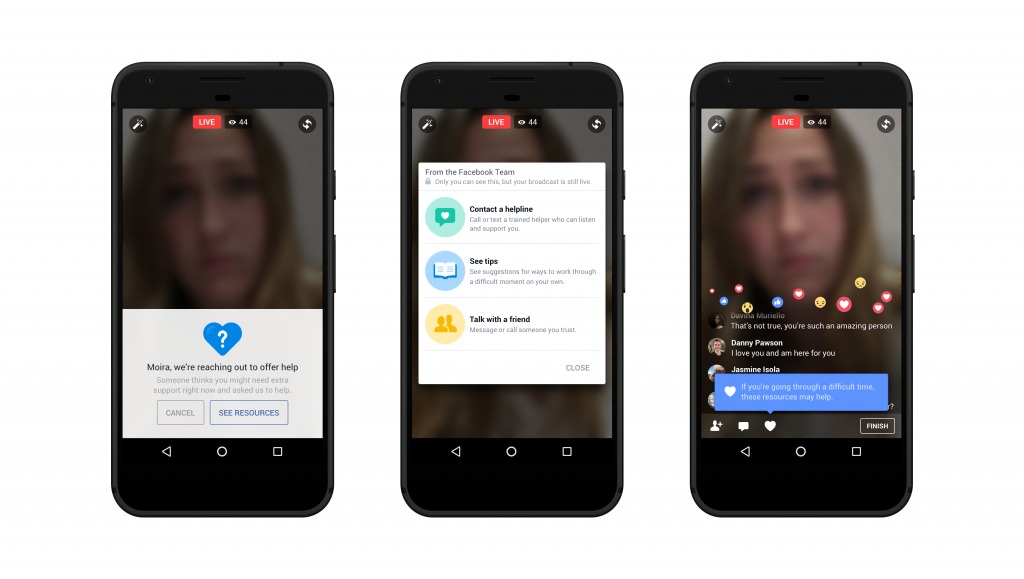

Facebook combines this with human reviewers and first responders so that flagged posts can also be analyzed in context. This helps the company get resources to people who seem to be in distress, whether by asking friends or family members to check up on them or by connecting them to local crisis hotlines or organizations that can help. You can also still report a post or account that you think might need help, and Facebook will reach out to them directly.

There are, of course, privacy issues that might arise from an AI going through people’s posts. Facebook’s chief security officer, Alex Stamos, admits that malicious use of AI will always be a risk, but says the company is committed to “weighing data use versus utility”. Hopefully, this will be a big help to people struggling with these issues, and they will get the help they need.

SOURCE: Facebook