Facebook has been courting content creator communities for years now, bringing tools and features that help them create more content for the platform and reach a wider audience across its various services. But along with those features, creators also need tools for moderation, protection, and customer support. This week, Facebook announced some updates as part of its efforts to build a better and safer platform for creators as well as the communities within that platform.

Comment moderation is a painstaking but necessary job for creators, especially those who already have a big audience. Facebook previously introduced a tool for hiding unwanted comments on posts, and it is now making that tool easier to use. The hide action now sits next to each comment, so creators can hide a comment with one click. There’s also an option to view all of the hidden comments by changing the comment filter view.

When it comes to keyword blocking, creators will be able to hide comments that contain combinations of words, numbers, and symbols, or different spellings of a blocked word. Facebook is also testing Moderation Assist, which creators can use to set criteria so that comments are moderated automatically. Facebook Live will soon get more comment moderation tools, including profanity keyword blocking, suspending or banning users, and stronger comment controls. Facebook will also soon test community moderation, which lets creators assign a viewer to moderate comments for them.
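
To give a rough idea of how keyword blocking could catch alternate spellings, here is a minimal Python sketch. It is not Facebook's implementation, which has not been published. The `LOOKALIKES` table and the `normalize` and `should_hide` functions are hypothetical names made up for this illustration, and the approach (normalizing both the comment and the blocked keywords, then comparing whole words) is just one simple way to do it.

```python
import re
import unicodedata

# Hypothetical table of look-alike characters mapped to letters (an assumption
# for this sketch, not a list published by Facebook).
LOOKALIKES = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})


def normalize(text: str) -> str:
    """Reduce a string to a canonical form so spelling variants compare equal."""
    # Split accented characters into base letter + accent, then drop the accents.
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Lowercase and replace digits/symbols that stand in for letters.
    text = text.lower().translate(LOOKALIKES)
    # Drop everything that is not a letter or whitespace (e.g. "s.p.a.m").
    text = re.sub(r"[^a-z\s]", "", text)
    # Collapse repeated characters ("spaaam" -> "spam"). Keywords go through
    # the same step, so "free" and "fr33" both become "fre".
    return re.sub(r"(.)\1+", r"\1", text)


def should_hide(comment: str, blocked_keywords: list[str]) -> bool:
    """Return True if any word in the comment matches a blocked keyword."""
    blocked = {normalize(keyword) for keyword in blocked_keywords}
    return any(word in blocked for word in normalize(comment).split())


if __name__ == "__main__":
    blocked = ["spam", "free"]
    for comment in ["Buy SP4M now", "$p.a.m!!!", "fr33 giveaway", "Great video, thanks"]:
        print(comment, "->", "hide" if should_hide(comment, blocked) else "keep")
```

Collapsing repeated letters makes the matching more aggressive, so a real system would likely need to balance this kind of normalization against hiding harmless comments by mistake.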

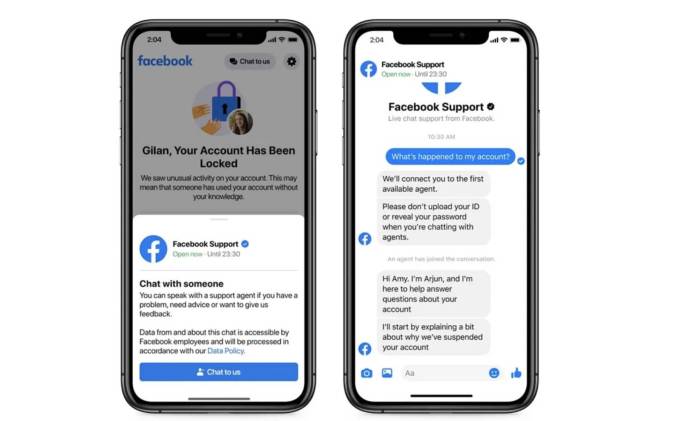

For creators who don’t have an assigned relationship manager, Facebook will soon test support through live chat in English. A dedicated creator support site will let them chat with a support agent about issues relating to Facebook or Instagram. Facebook is also now testing live chat for English-speaking users around the world who have been locked out of their accounts, specifically due to unusual activity or a supposed violation of the Facebook Community Standards.

Facebook is also piloting Safety School, a webinar focused on informing creators about the trust and safety tools that are available to them. It is available to creators in more than 27 countries, and Facebook will be expanding the program and its resources to more people soon.