Augmented reality devices and apps aren’t household items just yet, but we can expect them to gain even more traction over the next few years. Tech companies like Google have been working hard to make AR objects feel more “real” rather than just that weird thing that appears on your phone’s screen. Just this month, Google announced that ARCore phones will now be able to detect depth with a single lens. Now we’re seeing the result for end users with a new feature called “object blending,” which you can experience in Google Search AR.

According to 9to5Google, the ARCore Depth API needs only a single RGB camera because it relies on depth-from-motion algorithms. The technical description is that it works by taking “multiple images from different angles and comparing them as you move your phone to estimate the distance to every pixel.” Because of this, occlusion is now possible: digital objects can appear in front of or behind real-world objects.
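To make the idea concrete, here is a minimal Python sketch of the two steps described above. It is not ARCore's actual implementation, and it does not use the Depth API. The function names `depth_from_disparity` and `composite_with_occlusion` are hypothetical, and the sketch assumes the simplest textbook case: two views taken a known distance apart, with depth recovered by triangulation and occlusion decided by a per-pixel depth comparison.

```python
from typing import List, Optional


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate distance (in meters) to a point seen in two views.

    Assumption: the phone moved sideways by baseline_m between the two
    frames, and the point shifted by disparity_px pixels in the image.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def composite_with_occlusion(
    camera: List[List[str]],
    real_depth: List[List[float]],
    virtual: List[List[Optional[str]]],
    virtual_depth: List[List[float]],
) -> List[List[str]]:
    """Draw a virtual pixel only where it is closer than the real scene."""
    output = []
    for cam_row, depth_row, virt_row, vdepth_row in zip(
        camera, real_depth, virtual, virtual_depth
    ):
        row = []
        for cam_px, real_d, virt_px, virt_d in zip(
            cam_row, depth_row, virt_row, vdepth_row
        ):
            # None marks pixels the virtual object does not cover.
            visible = virt_px is not None and virt_d < real_d
            row.append(virt_px if visible else cam_px)
        output.append(row)
    return output


# A one-row "image": a wall 3 m away with a sofa 1 m away in the middle.
camera = [["wall", "sofa", "wall"]]
real_depth = [[3.0, 1.0, 3.0]]
# A virtual cat placed 2 m away, covering the first two pixels.
virtual = [["cat", "cat", None]]
virtual_depth = [[2.0, 2.0, 0.0]]

print(composite_with_occlusion(camera, real_depth, virtual, virtual_depth))
# prints [['cat', 'sofa', 'wall']]
```

The cat shows up in front of the wall but is hidden behind the sofa, which is the occlusion effect in miniature. A real pipeline would work on full-resolution images and noisy depth estimates, so treat this only as an illustration of the principle.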

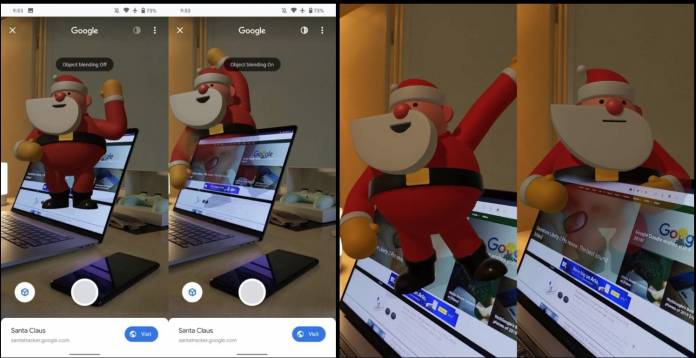

This object blending now appears in Google Search when you view 3D objects on AR-supported devices. You’ll see a half-shaded circular icon in the top right corner, along with an object blending on/off chip underneath the object. When you launch it for the first time, Google will tell you, “The object adapts to your environment by blending in with the real world.”

At least you won’t see virtual cats floating in your actual living room anymore. We might be getting close to the point where you can’t tell real objects from virtual ones when viewing AR. That can be both scary and fun, but at least we’ll get more realistic and immersive experiences in our AR apps and games.

Google Search AR’s object blending is now rolling out to around 200 million ARCore-supported Android devices. If you don’t see it yet, just wait, as the rollout hasn’t reached everyone.