At I/O 2017, Google reviewed how its new “AI first” vision permeates the latest big features in its products. Rather than treating machine learning as abstract, cold computation, Google is using it to power newer ways of interacting with computers, namely voice and vision. Voice is already something we’ve heard (no pun intended) a lot about thanks to today’s virtual personal assistants. Now Google is putting the focus on computer vision with the new machine learning-powered Google Lens, coming soon to smartphones near you.

If you’ve been following news about Samsung’s Bixby, the idea behind Google Lens will probably sound familiar. In a nutshell, Google Lens uses your phone’s camera to take a snapshot of a real-world object and then uses machine learning to identify what it is, such as a particular flower or plant.

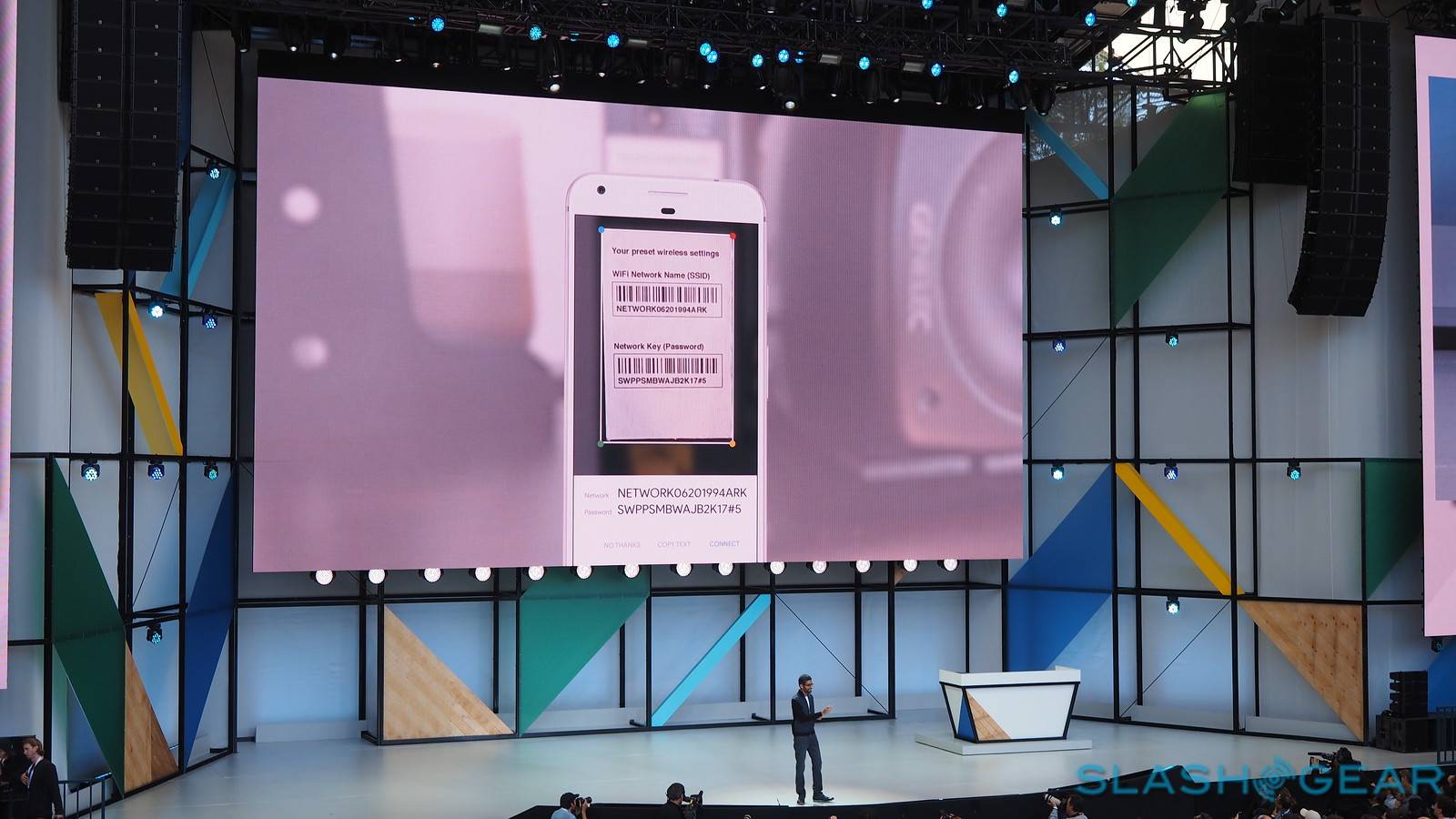

But that’s only half the story. What it does with that information is where the magic happens. Point the camera at a Wi-Fi access code and it will automatically connect you to that network.

Put an establishment’s name into focus and Google Maps will pull up the relevant info for you.

And it doesn’t stop there. Google promises that soon, its camera app will be able to magically remove obstructions from a photo, putting what matters most front and center. Or off-center, if that’s the case.

For most people, AI, machine learning, and deep learning have been either abstract ideas or intimidating fields. With products like Google Assistant and, soon, Google Lens, Google has given machine learning a less mechanical face.